A while back I started using Raspberry Pi systems to monitor environmental data around the house. I tried several "hats" that included different sensors. Most read temperature; some could check humidity, luminosity, and barometric pressure, allegedly.

The sensors I ended up liking the most connect to the Pi via the SparkFun QWIIC connection. The "all-in-one" sensors suffered from being too close to the Pi CPU, necessitating adjustments to the readings to compensate for the excess heat. Putting sensors just an inch or two (up to 5 cm) away avoided that.

After getting ambient in-house temperature readings, placing sensors in places like the water heater pipes and the clothes dryer door let me check out energy efficiency, in a way, or at least see when and for how long we use high-energy appliances. The local electric utility has hourly metrics I can download; a data acquisition story for another time.

With inside conditions measured I thought about putting a Pi sensor outside, and got as far as placing one temperature sensor in a window. But that is only on the edge of "outside" and gets some heat from the building instead of the atmosphere. I looked at getting a full-fledged weather station [ see: https://blog.netbsd.org/tnf/entry/the_geeks_way_of_checking ], then decided the investment wasn't necessary. There's a full-fledged meteorological station at a nearby airport that publishes ambient conditions that suit my needs [Insert George Carlin's joke about airport weather: nobody cares about the airport; downtown is on fire!].

Among other published data streams, there is a set that has evolved from early web days of FTP content into HTML pages that contain plain text (and bear "ftp" in their URL).

The site I am using is "KMTN"; many many others are there for the browsing. A few hundred sites have data not updated since 2008, interestingly.

Yes, you could use curl or wget, but I like Lynx:

# get metar data into a file

/usr/pkg/bin/lynx -dump https://tgftp.nws.noaa.gov/data/observations/metar/decoded/${SITE}.TXT > $DATAFILE
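For anyone who prefers curl, a minimal sketch of the same fetch might look like the following. The default values for `SITE` and `DATAFILE` and the function names `metar_url` and `fetch_metar` are assumptions for illustration; the post does not show how those variables are set.

```shell
#!/bin/sh
# Hypothetical defaults; the original script sets SITE and DATAFILE elsewhere.
SITE="${SITE:-KMTN}"
DATAFILE="${DATAFILE:-/tmp/metar-${SITE}.txt}"

# Build the decoded-METAR URL for a station identifier.
metar_url() {
    printf 'https://tgftp.nws.noaa.gov/data/observations/metar/decoded/%s.TXT\n' "$1"
}

# Download the decoded report for station $1 into file $2.
# -f: return non-zero on HTTP errors; -sS: quiet, but still print errors.
fetch_metar() {
    curl -fsS -o "$2" "$(metar_url "$1")"
}

# Example call (needs network access):
# fetch_metar "$SITE" "$DATAFILE"
```

Either way, the result lands in `$DATAFILE`.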

This file looks like:

Baltimore / Martin, MD, United States (KMTN) 39-20N 076-25W

Mar 27, 2024 - 01:57 PM EDT / 2024.03.27 1757 UTC

Wind: from the S (180 degrees) at 3 MPH (3 KT):0

Visibility: 2 mile(s):0

Sky conditions: overcast

Weather: heavy rain

Temperature: 46 F (8 C)

Dew Point: 44 F (7 C)

Relative Humidity: 93%

Pressure (altimeter): 30.19 in. Hg (1022 hPa)

ob: KMTN 271757Z 18003KT 2SM +RA OVC009 08/07 A3019

cycle: 18

Handy text data with a plethora of environmental conditions. The "ob" character string carries some of this data in the coded form of interest to pilots.

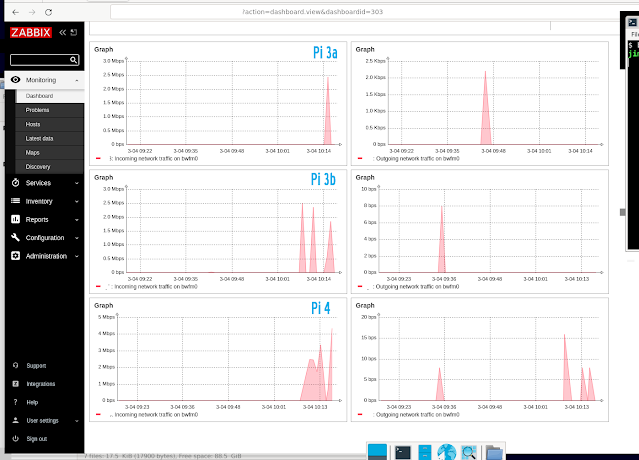

Zabbix

When I hooked up different Pi hats they typically included some code to gather the data, and I found ways to push/pull the data into a Zabbix monitoring suite. I leveraged published templates that included a variety of readings under one umbrella. The Sparkfun template came from?

I borrowed a shell script logic flow from Bernhard Linz:

# Script for Monitoring a Raspberry Pi with Zabbix

# 2013 Bernhard Linz

# Bernhard@znil.de / http://znil.net

#

# Sat Feb 5 15:12:21 UTC 2022 : translation from Linux to NetBSD

# Tue Jul 5 21:05:42 UTC 2022 : back to suse

# Sun Jul 17 01:50:10 AM UTC 2022 : sparkfun

A Sparkfun template from 2022:

<?xml version="1.0" encoding="UTF-8"?>

<zabbix_export>

<version>5.0</version>

<date>2022-11-04T20:48:52Z</date>

<groups>

<group>

<name>RaspberryPi</name>

</group>

</groups>

<templates>

<template>

<template>Sparkfun</template>

<name>Sparkfun</name>

<description>Pi Hat with display</description>

<groups>

<group>

<name>RaspberryPi</name>

</group>

[...]

When I started working on code to push airport conditions into Zabbix I decided to use the trapper mechanism, set up individual items as environmental parameters, and ignored the idea of a template. That was fine for just one Zabbix system, and probably okay for a 2-system landscape, but when I decided to add a third, I realized that copying the definitions by hand wasn't as easy as exporting a template and importing it into another system. And expanding the set made it more complex. I looked at template definitions inside Zabbix itself, finding an obscure reference to a template generator, a dead end for me (Template tooling version used: 0.38). I figured I would hand roll a template from the many examples.

All the way back to Zabbix 2.0 was this handy sample:

After a bit of trial-and-error I created a workable import file; the main difficulty was complaints if I copied one item to another without altering the UUID. There's probably a better way. Once I had items representing the airport conditions I was already gathering I added others that could be interesting, leaving out things like "ceiling". An extract follows:
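One way to avoid hand-editing UUIDs is to generate a fresh one for each copied item. The sketch below assumes, based only on the look of the values in this template, that Zabbix wants a random (version 4) UUID written as 32 lowercase hex digits with no dashes. The function name `new_uuid` is made up for illustration.

```shell
#!/bin/sh
# Print a random version-4 style UUID as 32 hex digits without dashes.
# Assumption: this is the shape the template import expects.
new_uuid() {
    # 16 random bytes rendered as 32 lowercase hex digits
    hex=$(od -An -N16 -tx1 /dev/urandom | tr -dc '0-9a-f')
    # Variant digit: keep the low two bits, force the high bits to binary 10
    c=$(echo "$hex" | cut -c17)
    v=$(printf '%x' $(( (0x$c & 3) | 8 )))
    # Digit 13 is forced to 4 (the version); digit 17 is the variant digit
    printf '%s4%s%s%s\n' \
        "$(echo "$hex" | cut -c1-12)" \
        "$(echo "$hex" | cut -c14-16)" \
        "$v" \
        "$(echo "$hex" | cut -c18-32)"
}

# Example: print one value to paste into a <uuid> element
# new_uuid
```

Each call reads new random bytes, so pasting one result per copied item should keep the items distinct.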

<item>

<uuid>9d798f42e46b450f85edd27c0bb83ae7</uuid>

<name>Ambient Wind Speed</name>

<type>TRAP</type>

<key>enviro[Wind.Speed]</key>

<delay>0</delay>

<value_type>FLOAT</value_type>

<units>MPH</units>

<description>Ambient Wind Speed in MPH</description>

<tags>

<tag>

<tag>Application</tag>

<value>Environment</value>

</tag>

</tags>

</item>

<item>

<uuid>9d798f42e46b450f85edd27c0bb83ae6</uuid>

<name>Ambient Wind Direction in Degrees</name>

<type>TRAP</type>

<key>enviro[Wind.Direction]</key>

<delay>0</delay>

<value_type>UNSIGNED</value_type>

<units>degrees</units>

<description>Ambient Wind Direction in Degrees</description>

<tags>

<tag>

<tag>Application</tag>

<value>Environment</value>

</tag>

</tags>

</item>

<item>

<uuid>9d798f42e46b450f85edd27c0bb83af6</uuid>

<name>Ambient Wind Direction N-S-W-E Compass Rose</name>

<type>TRAP</type>

<key>enviro[Wind.Rose]</key>

<delay>0</delay>

<value_type>TEXT</value_type>

<description>Ambient Wind Direction Rose</description>

<tags>

<tag>

<tag>Application</tag>

<value>Environment</value>

</tag>

</tags>

</item>

I like this example as it includes float, unsigned, and text, to check if data transforms and transfers work as intended. The "key" is the critical design component for storing and retrieving values. I decided to include everything under one array, and name the pointers with capitals, separating similar parameters with a period, so the wind values start with "Wind.". Other conventions include dashes, or just characters.

Because the airport team updates their site hourly, that is the data resolution; collecting more than once per hour would generate flat lines between the hours, and fill the database with redundancies. I set up one cron job to pull the data and one to push it into Zabbix. There are probably some error conditions I should trap, like when FIOS is not working as it should.

CRON

[ ... ] parse-metar.sh >load-metar.sh

The parse phase is a set of grep commands, followed by sed, then awk, based on the data file retrieved first.

The lines for the 3 wind values:

grep "^Wind: " $DATAFILE | sed -e "s/(//g" -e "s/)//g" | awk '{print ""ENVIRON["ZABBIX_SEND"]" enviro[Wind.Rose] -o " $4}'

grep "^Wind: " $DATAFILE | sed -e "s/(//g" -e "s/)//g" | awk '{print ""ENVIRON["ZABBIX_SEND"]" enviro[Wind.Direction] -o " $5}'

grep "^Wind: " $DATAFILE | sed -e "s/(//g" -e "s/)//g" | awk '{print ""ENVIRON["ZABBIX_SEND"]" enviro[Wind.Speed] -o " $8}'

To make the script a little tidier, this version puts the zabbix_sender command into an environment variable. Others might use a Bash-ism... The trickiest part was capturing the cryptic observation codes with embedded spaces, as multiple values need to be quoted for Zabbix to store properly.

export ZABBIX_SEND="/usr/pkg/bin/zabbix_sender -vv -z ${ZABBIX_SERV} -p 10051 -s ${ZABBIX_HOST} -k "

The server is the Zabbix system, and the host is the system in Zabbix that gets the data. It could be any system; I chose a file server as it is quite likely to stay available most of the time.
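As a sketch of how the parse step could be tightened, the three wind pipelines and the quoted observation string can be produced in a single awk pass. This is an illustration under assumptions, not the script as it runs today: the function name `parse_wind_and_ob` and the key `enviro[Ob]` are hypothetical, and the wind rule only matches the "from the ..." layout shown in the sample file, so any other wording of the Wind line is skipped rather than mis-parsed.

```shell
#!/bin/sh
# Emit zabbix_sender command lines for the wind values and the raw "ob" string.
# Expects ZABBIX_SEND to be exported, as in the original script.
parse_wind_and_ob() {
    awk '
        # Directional wind report, e.g.
        # "Wind: from the S (180 degrees) at 3 MPH (3 KT):0"
        /^Wind: from the / {
            gsub(/[()]/, "")    # drop parentheses so the fields split cleanly
            print ENVIRON["ZABBIX_SEND"] " enviro[Wind.Rose] -o " $4
            print ENVIRON["ZABBIX_SEND"] " enviro[Wind.Direction] -o " $5
            print ENVIRON["ZABBIX_SEND"] " enviro[Wind.Speed] -o " $8
        }
        # Raw observation: strip the label and trailing whitespace, then wrap
        # the value in double quotes so the shell passes it as one argument.
        /^ob: / {
            sub(/^ob: /, "")
            sub(/[ \t\r]+$/, "")
            print ENVIRON["ZABBIX_SEND"] " enviro[Ob] -o \"" $0 "\""
        }
    ' "$1"
}

# Example: parse_wind_and_ob "$DATAFILE" > load-metar.sh
```

The double quotes around the observation are what keep its embedded spaces together when the generated line is later run by the shell.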

The load script is the standard output from the parse phase. Again, little error checking; if the data file doesn't exist the load fails and leaves a gap in the record.
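A small guard before the parse phase would at least make that failure explicit. The following is a minimal sketch, assuming a usable download always contains an "ob: " line as in the sample above; the function name `metar_file_ok` is hypothetical.

```shell
#!/bin/sh
# Return success only if the downloaded report looks usable.
metar_file_ok() {
    # The file must exist and be non-empty
    [ -s "$1" ] || return 1
    # It must contain the raw observation line seen in the sample
    grep -q '^ob: ' "$1"
}

# Example use ahead of the parse step:
# metar_file_ok "$DATAFILE" || { echo "bad or missing $DATAFILE" >&2; exit 1; }
```

This does not fill the gap in the record, but it stops a stale or empty file from being parsed and leaves a message behind to explain the gap.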

LOAD

If everything works, the load into Zabbix shows 1 processed per record:

zabbix_sender [22659]: DEBUG: answer [{"response":"success","info":"processed: 1; failed: 0; total: 1; seconds spent: 0.000018"}]

Response from "zab.bix:10051": "processed: 1; failed: 0; total: 1; seconds spent: 0.000018"

sent: 1; skipped: 0; total: 1
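Since the load has little error checking, one option is to scan the sender output for failures. The sketch below assumes the output format shown above, with one `Response from` line per send; other zabbix_sender versions or verbosity levels may word it differently. The function name `sender_failures` is hypothetical.

```shell
#!/bin/sh
# Read zabbix_sender output on stdin and print the total number of failed values.
sender_failures() {
    awk '
        /^Response from / {
            # Pull the number out of "failed: N" ("failed: " is 8 characters)
            if (match($0, /failed: [0-9]+/))
                total += substr($0, RSTART + 8, RLENGTH - 8)
        }
        END { print total + 0 }
    '
}

# Example: count failures across the whole load
# sh load-metar.sh 2>&1 | sender_failures
```

Only the `Response from` lines are counted, so the DEBUG line that repeats the same text under `-vv` is not counted twice.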

TEMPLATE

Here is how my environmental template looks now:

Adding keys to the template did not add them to a host that already used a prior version of the template, but deleting and re-adding the template seemed to work.

GRAPHS

One "interesting" flaw I noticed when viewing graphs of the measurements was duplicate Y-axis values.

I saw this in Zabbix 6.0, 6.2 and 6.4; the later versions have improved axes labeling with color differentiators for "top of the hour" moments.

6.0

6.4

If the Y range is large enough, the values are distinct. A close-up of the values when too close together:

Maybe there is a bug report on this; haven't looked yet.

VERSIONS

I've gone backwards in Zabbix versions after getting 6.2 and 6.4 working on FreeBSD, because I wanted to use NetBSD, which has 6.0 in pkgsrc applications. I've not found too many obstacles moving definitions among the versions; the bigger challenge is changes in layout or other user experience factors. "Configuration" is now "Data Collection".

The "knot" joke in the post title is that I skipped over wind speed in knots, not being a son of a son of a sailor.